Michael Peterson

Director Research & Innovation

SpinSys

Introduction

This post is part of our continued exploration of how machine learning can be used in the medical domain. While looking for new applications, we learned that in 2015-2016 the prevalence of obesity among adults in the United States was 39.8%, which means that about 93.3 million American adults were affected by the disease.

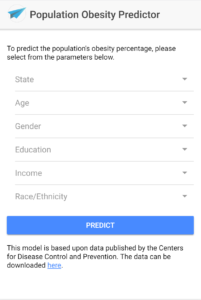

The Centers for Disease Control and Prevention (CDC) collects a variety of healthcare data through the Behavioral Risk Factor Surveillance System (BRFSS), which is conducted via telephone surveys. The obesity-related data is gathered, stratified and published for public consumption on the healthdata.gov website, where it can be downloaded as JSON, CSV, XML or RDF. The data is categorized by state, age, gender, education, income and race/ethnicity. This format works well if you want to view the data by a single category, but we wondered whether machine learning could be used to predict obesity rates across multiple categories at once.

This post walks you through how we trained a model that can predict obesity rates across location, age, gender, education, income and race/ethnicity. You can view our progressive web app (PWA) prototype that is driven by the model here. It is designed to be viewable from a mobile device.

We will begin with an overview of machine learning concepts that will appeal to a larger audience, and then we will drill down into the technical implementation details. The implementation details may require some prior knowledge of machine learning concepts and are intended for a technical audience.

Machine Learning Overview

Machine learning (ML), an application of artificial intelligence (AI), involves using algorithms that can learn from data and make predictions based upon it. Machine learning techniques can be categorized into three broad areas:

- Supervised Learning

- Unsupervised Learning

- Reinforcement Learning

In supervised learning, the label, or expected result, is included in the data that is fed into the model. The data is used to train the model to make future predictions. Our prediction of obesity rates is an example of supervised learning. Other examples of supervised learning include image recognition and sentiment analysis. In unsupervised learning, the expected results are unknown and it is up to the model to infer relationships and structure in the data. Uses of unsupervised learning include genomics and behavioral-based detection in network security. Reinforcement learning can be summarized as “learn as you go”. The algorithm makes decisions, continuously receives feedback and modifies future decisions based upon the feedback. You can find reinforcement learning being used in many places from elevator scheduling to robotics.

ML.NET Overview

There are several machine learning frameworks and libraries available for use. The majority of them require Python for developing, training and evaluating models. In May of 2018, Microsoft announced the release of ML.NET, a cross-platform, open source machine learning framework. This framework has been under development by Microsoft Research over the last decade and is used by various Microsoft products including Windows, Bing, Azure and Office. ML.NET is supported on Windows, Linux and macOS. If Python is not available in your environment or your team is not familiar with it, ML.NET can be used to train and consume models using C# or F#. Its current release includes support for both classification and regression. ML.NET’s roadmap includes future support for popular deep learning libraries such as TensorFlow, Caffe2 and CNTK.

To try it out, we utilized ML.NET and C# to build our obesity prediction model.

Identifying the Dataset

While building an obesity prediction model, the first thing we had to determine was how the data could be leveraged for training and evaluating the model. The downloaded CDC dataset is pre-aggregated and stratified by location and other various categories. It also includes various lifestyle questions such as information about the frequency of physical activity and the consumption of vegetables. In the future, we could expand our model to include and correlate these elements as well, but for our obesity model we decided to work only with the obesity rates. We removed the unnecessary rows and columns so that the data could be easily ingested.

Building the Model

Once we had our dataset defined, we needed to choose an algorithm and develop the model. With ML.NET, our choices of algorithm included classification (binary and multi-class), clustering and regression. Since we wanted to predict a numeric value based upon various parameters, we chose regression. After we had selected an algorithm, we needed to construct our learning pipeline.

A learning pipeline holds all the steps of the learning process, such as the data retrieval and transformations. The first thing we needed to define in our learning pipeline was the data ingestion:

new TextLoader(ObesityDataPath).CreateFrom<ObesityData>(separator:',')

We used a text loader that reads our input CSV and parses it into our ObesityData class. Next we had to define the labels and features. When ML.NET starts training, it expects two columns: “Label” and “Features”. The label is the value to be predicted. Features are the parameters or attributes associated with that label. If your dataset already has a field named “Label”, the next line of code is not needed. Since our dataset doesn’t have a column titled “Label”, we used a column copier to copy our “DataValue” column into it:

new ColumnCopier(("DataValue", "Label"))

Once our label was defined, we needed to define our features. We first needed to use a vectorizer to transform our categorical (string) values into 0/1 vectors:

new CategoricalOneHotVectorizer("LocationAbbr", "Age", "Education", "Gender", "Income", "Race")
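To illustrate what a one-hot transformation does, here is a minimal plain C# sketch. It is not ML.NET's implementation: the OneHotSketch class, its method names and the sorted slot order are all assumptions made for illustration only.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper, for illustration only (not part of ML.NET).
public static class OneHotSketch
{
    // Build a vocabulary: each distinct category gets one slot.
    // Sorting is an arbitrary choice made here so the slot order is predictable.
    public static IReadOnlyList<string> BuildVocabulary(IEnumerable<string> values) =>
        values.Distinct().OrderBy(v => v, StringComparer.Ordinal).ToList();

    // Encode one value as a vector with a single 1 at the value's slot.
    // A value that is not in the vocabulary encodes as all zeros.
    public static float[] Encode(IReadOnlyList<string> vocabulary, string value)
    {
        var vector = new float[vocabulary.Count];
        for (int i = 0; i < vocabulary.Count; i++)
        {
            if (vocabulary[i] == value)
            {
                vector[i] = 1f;
                break;
            }
        }
        return vector;
    }
}
```

With a vocabulary built from "Male", "Female", "Male", the slots are Female and Male, so "Male" encodes as the vector (0, 1) and "Female" as (1, 0).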

We then used a concatenator to combine them all into the “Features” column:

new ColumnConcatenator("Features", "LocationAbbr", "Age", "Education", "Gender", "Income", "Race")

The last thing we needed for our learning pipeline was the algorithm to be used to train the model:

new FastTreeRegressor()
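Assembled in one place, the steps above might look like the sketch below. It assumes the early LearningPipeline API (roughly ML.NET 0.2) that the snippets in this post use; later releases moved or replaced these types. The post does not show the ObesityData and ObesityPrediction classes, so the versions here, including the column ordinals and the ObesityPipeline.Build helper, are hypothetical.

```csharp
using Microsoft.ML;
using Microsoft.ML.Data;
using Microsoft.ML.Runtime.Api;
using Microsoft.ML.Trainers;
using Microsoft.ML.Transforms;

// Input row. The column ordinals are assumptions about the cleaned CSV layout.
public class ObesityData
{
    [Column("0")] public string LocationAbbr;
    [Column("1")] public string Age;
    [Column("2")] public string Education;
    [Column("3")] public string Gender;
    [Column("4")] public string Income;
    [Column("5")] public string Race;
    [Column("6")] public float DataValue; // obesity rate; copied to "Label" below
}

// Regression output. The predicted value is read from the "Score" column.
public class ObesityPrediction
{
    [ColumnName("Score")] public float DataValue;
}

// Hypothetical helper that wires the pipeline steps together in order.
public static class ObesityPipeline
{
    public static LearningPipeline Build(string obesityDataPath)
    {
        var pipeline = new LearningPipeline();

        // 1. Ingest the CSV into ObesityData rows.
        pipeline.Add(new TextLoader(obesityDataPath).CreateFrom<ObesityData>(separator: ','));

        // 2. Copy the value to predict into the "Label" column.
        pipeline.Add(new ColumnCopier(("DataValue", "Label")));

        // 3. Turn the categorical string columns into 0/1 vectors.
        pipeline.Add(new CategoricalOneHotVectorizer(
            "LocationAbbr", "Age", "Education", "Gender", "Income", "Race"));

        // 4. Combine the encoded columns into the single "Features" column.
        pipeline.Add(new ColumnConcatenator(
            "Features", "LocationAbbr", "Age", "Education", "Gender", "Income", "Race"));

        // 5. Choose the regression trainer.
        pipeline.Add(new FastTreeRegressor());

        return pipeline;
    }
}
```

The order of the Add calls matters: each step consumes the columns produced by the steps before it, so the trainer has to come last.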

Training and Evaluating the Model

After we had defined our learning pipeline, we needed to set up an app to perform the training and evaluation of the model. Our dataset included survey results from 2011-2016. The 2011-2015 results were used for training and the 2016 results were used for evaluation. To train the model, we executed:

var model = pipeline.Train<ObesityData, ObesityPrediction>();

We then saved our model as a ZIP file:

await model.WriteAsync(ModelPath);
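The split by survey year mentioned above (2011-2015 for training, 2016 for evaluation) is not shown in this post. One way it could be done before training is sketched below in plain C#. The YearSplitSketch class is hypothetical, and the position of the year column is passed in by the caller because the layout of the cleaned CSV is an assumption.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical helper, for illustration only.
public static class YearSplitSketch
{
    // Splits CSV rows into training rows (year <= lastTrainingYear) and
    // evaluation rows (year > lastTrainingYear).
    // yearColumn is the zero-based index of the survey-year field.
    public static (List<string> Train, List<string> Eval) Split(
        IEnumerable<string> rows, int yearColumn, int lastTrainingYear)
    {
        var train = new List<string>();
        var eval = new List<string>();

        foreach (var row in rows)
        {
            // Naive comma split: assumes no quoted fields that contain commas.
            var fields = row.Split(',');
            if (fields.Length <= yearColumn)
            {
                continue; // skip malformed rows
            }

            // Rows whose year field is not an integer (such as a header) are skipped.
            if (!int.TryParse(fields[yearColumn], NumberStyles.Integer,
                    CultureInfo.InvariantCulture, out var year))
            {
                continue;
            }

            (year <= lastTrainingYear ? train : eval).Add(row);
        }

        return (train, eval);
    }
}
```

Calling Split(rows, 0, 2015) on rows whose first field is the survey year would place the 2011-2015 rows in Train and the 2016 rows in Eval. Each list could then be written to its own file for the text loader to read.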

Once our model had been persisted to disk, we used our 2016 evaluation data to determine its accuracy. After using a text loader to load the evaluation data, we instantiated an evaluator with the following code:

var evaluator = new RegressionEvaluator();

We then evaluated and returned various metrics regarding the accuracy of our model:

var metrics = evaluator.Evaluate(model, testData);

We were interested in the “Rms” property, which contains the root mean square loss (RMS), the square root of the L2 loss. Lower is better; ideally it should be around 2.8, and it can be improved by increasing the size of the training dataset. The other property we were interested in was the “RSquared” value of the model, also known as the coefficient of determination. This value is at most 1 and should be as close to 1 as possible. It typically falls between 0 and 1, although a model that fits worse than always predicting the mean can produce a negative value.
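To make the two metrics concrete, the following plain C# sketch computes them from their textbook definitions. It is not ML.NET's evaluator code, and the RegressionMetricsSketch class and its method names are hypothetical.

```csharp
using System;
using System.Linq;

// Hypothetical helper, for illustration only (not ML.NET's evaluator).
public static class RegressionMetricsSketch
{
    // RMS = sqrt(L2), where L2 is the mean of the squared errors.
    public static double Rms(double[] actual, double[] predicted)
    {
        CheckLengths(actual, predicted);
        double l2 = actual.Zip(predicted, (a, p) => (a - p) * (a - p)).Average();
        return Math.Sqrt(l2);
    }

    // R squared = 1 - (sum of squared errors / total sum of squares around the mean).
    // If every actual value is identical the denominator is zero and the
    // result is not meaningful.
    public static double RSquared(double[] actual, double[] predicted)
    {
        CheckLengths(actual, predicted);
        double mean = actual.Average();
        double ssRes = actual.Zip(predicted, (a, p) => (a - p) * (a - p)).Sum();
        double ssTot = actual.Sum(a => (a - mean) * (a - mean));
        return 1.0 - ssRes / ssTot;
    }

    private static void CheckLengths(double[] actual, double[] predicted)
    {
        if (actual.Length == 0 || actual.Length != predicted.Length)
        {
            throw new ArgumentException("Arrays must be non-empty and the same length.");
        }
    }
}
```

For example, with actual rates of 30, 32 and 34 and predictions of 31, 32 and 33, the squared errors are 1, 0 and 1. The L2 loss is 2/3, so the RMS is about 0.82, and R squared is 1 - 2/8 = 0.75.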

Model Consumption

After we had trained and evaluated the accuracy of our model, it was ready to be consumed. Models can be consumed in applications such as our Population Obesity Predictor. They can also be used to generate datasets across various dimensions. The resulting datasets could then be ingested into OLAP models for visualization in various BI tools. For our obesity predictor PWA, we decided to use an AWS Lambda function, also written in C#, to predict obesity rates based upon the specified parameters:

var model = await Microsoft.ML.PredictionModel.ReadAsync<ObesityData, ObesityPrediction>("ObesityModel.zip");

var prediction = model.Predict(new ObesityData { LocationAbbr = state, Age = age, Education = education, Income = income, Gender = gender, Race = race });

Once we had unit tested and published our Lambda function, we created an endpoint in the AWS API Gateway to expose it via a RESTful service, and then uploaded our PWA to an S3 bucket to make it available to the public.
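For readers who want to see how the prediction call might sit inside a Lambda function, here is a rough sketch. It is not our production handler: the Function class, the FunctionHandler name, the use of ObesityData as the request type and the data class definitions are all assumptions, and it relies on the same early ML.NET API as the rest of this post plus the Amazon.Lambda.Core and Amazon.Lambda.Serialization.Json packages.

```csharp
using System;
using System.Threading.Tasks;
using Amazon.Lambda.Core;
using Microsoft.ML;
using Microsoft.ML.Runtime.Api;

// Tell Lambda how to convert the incoming JSON payload into a .NET object.
[assembly: LambdaSerializer(typeof(Amazon.Lambda.Serialization.Json.JsonSerializer))]

// Hypothetical data classes; the field names follow the snippets in this post.
public class ObesityData
{
    public string LocationAbbr;
    public string Age;
    public string Education;
    public string Gender;
    public string Income;
    public string Race;
    public float DataValue;
}

public class ObesityPrediction
{
    [ColumnName("Score")] public float DataValue;
}

public class Function
{
    // Load the model lazily and keep it in a static field so that warm
    // invocations in the same container do not re-read the ZIP file.
    private static readonly Lazy<Task<PredictionModel<ObesityData, ObesityPrediction>>> Model =
        new Lazy<Task<PredictionModel<ObesityData, ObesityPrediction>>>(
            () => PredictionModel.ReadAsync<ObesityData, ObesityPrediction>("ObesityModel.zip"));

    // Hypothetical handler: takes the requested categories and returns the
    // predicted obesity rate.
    public async Task<float> FunctionHandler(ObesityData input, ILambdaContext context)
    {
        var model = await Model.Value;
        var prediction = model.Predict(input);
        return prediction.DataValue;
    }
}
```

Caching the loaded model is a design choice in this sketch: reading the model on every request would also work, but it repeats the file read and deserialization on each call.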

Conclusion

Machine learning has many potential applications, from network security to robotics, and there are many applications of it in healthcare as well. Although ML.NET was made available to the public just last month, it seems to be very well thought out and can be used to quickly integrate machine learning models into enterprise applications. We are looking forward to ML.NET’s support of deep learning frameworks and intend to publish additional posts covering that support once it becomes available.